Participation in the WTF Workshop 2022

We presented our failures and troubles at WTF 2022.

Last week we presented online at the Working with Troubles and Failures (WTF) workshop, which aims to “bring together a multidisciplinary group of researchers from the fields of robotics, human-Robot Interaction (HRI), natural language processing, conversation analysis and pragmatics. The central idea of the workshop is to discuss the multitude of failures of speech interfaces openly and, if possible, systematically, in the hope of identifying the most fruitful directions for overcoming these failures in future systems. The envisioned types of failures may range from failures of speech recognition to pragmatic failures and infelicities.”

Speaking as conversation analysts, we think that the most pressing issue for speech interfaces is modelling (let’s say) “context” as a resource for action rather than as a constraint on an ideal interaction scenario. Such modelling could begin with how CA accounts for context: sequentiality “at all points”, because interaction is a temporally continuous process and not a logical one. This could help bring about in speech interfaces the same “pragmatic turn” that happened in linguistics. It is not a simple task. For example, the informatics literature on modelling the Turn-Taking System (and on defining turn-relevant positions) is oriented towards processing the audio/video signal of a single turn (e.g. Skantze 2020) rather than a “sequence”. “Context” is recognized as relevant but too difficult to process. Meanwhile, CA is in an “in-between” state where

- its analyses of how actions and their design index the context (activities, identities, environment…) are quite cumulative, but at the same time

- it does not propose to formalize how these contextual components weigh on and interact with each other with respect to the rights and obligations to initiate or respond, and how to do so.

This challenge involves a lot of work on data.

Here are some insights into what we presented at this workshop:

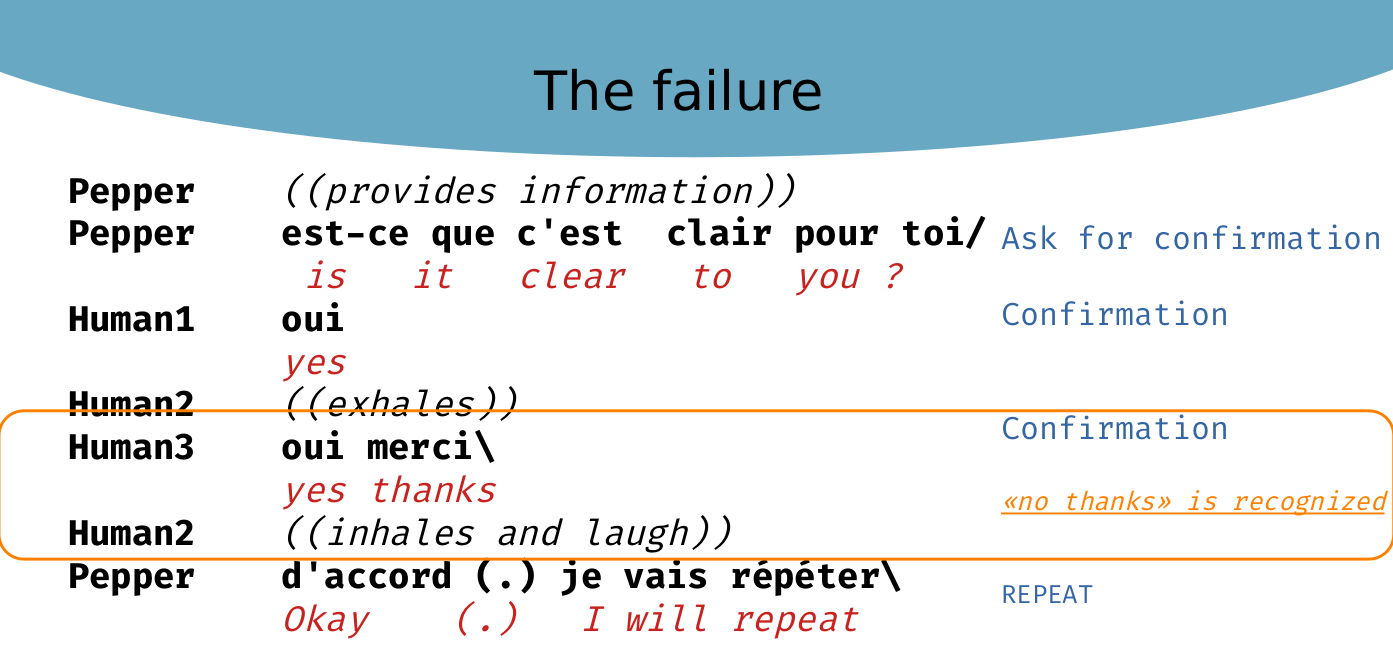

A case of failure

One failure that we presented is on the slide above: the Pepper robot succeeded in recognising the student’s question and responded to it. Pepper then asks whether the information provided is clear enough. If yes, Pepper initiates a pre-closing sequence; if no, Pepper repeats the previous information. Here, a first human says “yes”, then another one says “yes thanks”, and Pepper recognizes this utterance as “no thanks”. The consequences are quite dramatic, as these responses lead to very different next activities. Now, while we could see this failure as just a word recognition problem between “yes” and “no”, it is in fact the result of design choices that raise significant issues for us.

The design

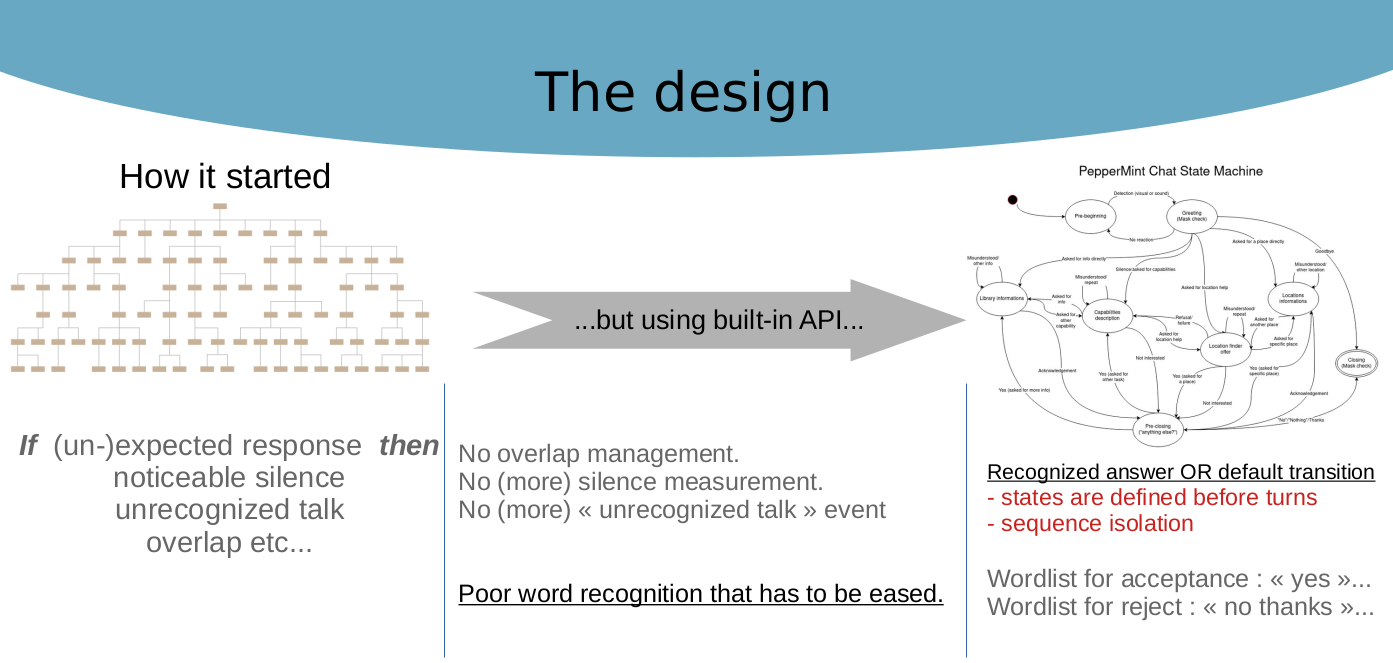

How did we get here? We work with the idea that a step-by-step approach based on data allows us to better understand what users expect of Pepper in this situation. Thus, we developed a first version of the robot, based on its built-in functionalities, in order to collect interactional data. The scenario started as a tree diagram that quickly branched into multiple possibilities, as we wanted to handle generic sequential phenomena like overlap, silences, repair and reformulations. The big picture was complex, but building the scenario was rather simple, as it could be done systematically. However, we realized that what we consider accountable phenomena in CA are not that easy to account for as programming events. In particular, we encountered several limitations due to the built-in functionalities and the poor performance of word and talk recognition in natural, noisy environments such as the library entrance.

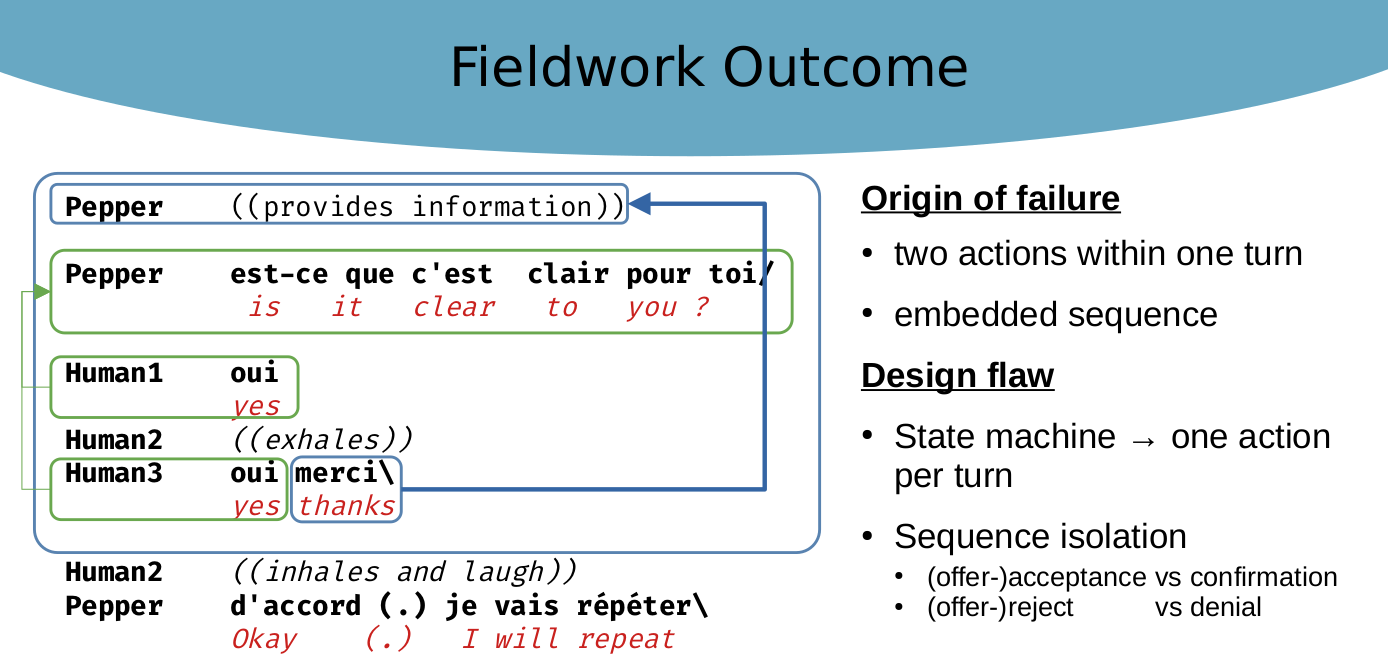

After several discussions, we agreed to design our scenario as a state machine, and we defined wordlists to recognize in order to create rules based on a list match. Each state set up one action and a response to this action. For the example above, we created wordlists for the acceptance or rejection of offers. In order to improve word recognition on such short words (“yes” vs. “no”), we added “no thanks” to the “reject” list. Indeed, the CA literature shows that a dispreferred response is more elaborated and accounted for. “No thanks” is a quite perspicuous and generic turn in that position, while “yes thanks” is not expected as a single action. In order to simplify the script, we merged into the same list words that could be recognized as accomplishing an offer rejection but also denials. We did not see how this could be a problem, as we were now focused on separate and closed so-called “states”. Asking whether the information was clear was one separate action.
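The design described here can be pictured roughly as follows. This is only a minimal sketch, not the script that ran on Pepper: the state names, the English wordlists and the `closed_vocabulary_match` helper are assumptions made for illustration, and plain string similarity (Python's `difflib`) merely stands in for whatever matching the built-in recognizer performs against its wordlists.

```python
from difflib import get_close_matches
from typing import Optional

# Hypothetical wordlists (English glosses; the deployed lists were in French).
ACCEPT = ["yes", "ok", "sure"]
# Offer rejections and denials merged into one list, as described in the text.
REJECT = ["no", "no thanks", "not really"]

# Hypothetical state table: one expected action per state, one next state per action.
TRANSITIONS = {
    "CHECK_UNDERSTANDING": {"accept": "PRE_CLOSING", "reject": "REPEAT_ANSWER"},
}


def closed_vocabulary_match(heard: str) -> Optional[str]:
    """Return the wordlist entry closest to what was heard, or None.

    Assumption: string similarity is a stand-in for acoustic matching.
    """
    vocabulary = ACCEPT + REJECT
    matches = get_close_matches(heard.lower(), vocabulary, n=1, cutoff=0.6)
    return matches[0] if matches else None


def next_state(state: str, heard: str) -> str:
    """Ascribe exactly one action to the turn and follow the transition."""
    entry = closed_vocabulary_match(heard)
    if entry is None:
        return state  # nothing close enough in the wordlists: stay put
    action = "accept" if entry in ACCEPT else "reject"
    return TRANSITIONS[state][action]
```

Under these assumptions, `next_state("CHECK_UNDERSTANDING", "yes")` moves to `PRE_CLOSING`, whereas `"yes thanks"`, which is in neither list, is pulled towards its nearest entry `"no thanks"` and lands in `REPEAT_ANSWER`. The point of the sketch is that a closed vocabulary combined with one action per state leaves no room for a turn the lists did not anticipate.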

The problem

Now, when we look at what happened, the problem is that when Pepper asks the user to confirm, this sequence might in fact be embedded in the question/answer sequence that was previously initiated. The users said “yes thanks” because they accomplished two actions in the same turn:

- they confirmed that they had understood and

- they finally had the opportunity to thank the robot for its service: the first structurally provided sequential slot.
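To make the contrast with a one-action-per-turn rule concrete, here is a small sketch of what ascribing several actions to one turn could look like. It is purely illustrative and was not part of the deployed scenario: the `CUES` table, the action labels and the `ascribe_actions` function are hypothetical, and keyword spotting is a deliberately crude substitute for a real analysis of the turn.

```python
import re

# Hypothetical cue lists (English glosses of the French data).
CUES = {
    "confirm": ["yes", "ok"],
    "deny": ["no", "not really"],
    "thank": ["thanks", "thank you"],
}


def ascribe_actions(turn: str) -> list[str]:
    """Return every action cued in the turn, in order of first occurrence."""
    # Normalize: lowercase, drop punctuation, pad with spaces for whole-word matching.
    words = re.findall(r"[a-z']+", turn.lower())
    padded = " " + " ".join(words) + " "
    found = []
    for action, cues in CUES.items():
        positions = [padded.find(f" {cue} ") for cue in cues]
        positions = [p for p in positions if p != -1]
        if positions:
            found.append((min(positions), action))
    return [action for _, action in sorted(found)]
```

With these hypothetical cues, `ascribe_actions("Yes, thanks")` yields both a confirmation and a thanking instead of forcing the whole turn into a single list match. Deciding what the robot should do next with such a pair still depends on the sequential position of the turn, which this sketch does not model.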

The good news is that the French “merci” (“thank you”) actually helps the recognition! But the problem is that we designed the turn recognition after having set up the state machine, ascribing one action per turn. This way, we lost sight of the larger sequential environment. Not many people even got to the point of having been given information. And when they did, it meant that they had aligned with the robot and were satisfied with the interaction (indeed, they were thanking it). So, at this point, when they realized that the robot couldn’t understand such a simple action, and when they saw that it was about to repeat the entire response, the users each time started to laugh out loud, tried to interrupt it, or just left.